How the polls did in the 2021 election

Pollsters were close to the mark, again

There’s little fanfare when a plane lands safely — that is what is supposed to happen. It’s the same with the polls. I’m never asked to comment on polls more than when they miss the mark. When they are on target everybody moves on.

But not me! Instead, it is time to delve into how well the polls did in the 2021 federal election. The answer is: pretty darn good.

There was a lot of polling in this election, with Wikipedia recording 154 polling releases over the 36-day campaign, an average of 4.3 per day. Granted, three of those each day were the daily tracking releases from Nanos, EKOS and Mainstreet, but that is still a lot of polling, and a lot more than in 2019, when the tally was under 120 for the entire campaign.


Overall, the polls were very accurate. The final surveys conducted by each of the pollsters in the field in the last week averaged a literal tie between the Liberals and the Conservatives. In the end, the margin was 1.1 percentage points. That’s about as close as you can reasonably expect to get, and better than 2019’s already-small error of 1.5 points in the Conservative-Liberal margin.

By comparison, the national polls in the U.S. presidential elections have been off by about two to four points over the last three elections.

Léger repeats as pollster champion

But let’s break it down into detail. First, we’ll take a look at who got closest to the mark at the national level.

(For comparison purposes, I’ve included the results of my Poll Tracker for the CBC. I’ve also rounded off all the numbers, but the error calculations use the first decimal place of the results and, where the pollster reported one, of the polls.)

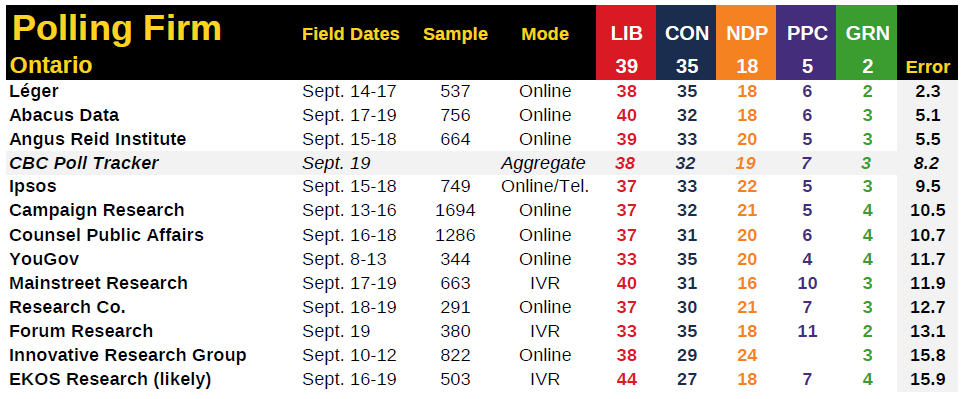

For the second consecutive election, the award for most accurate poll goes to Léger. The total error across the six parties was just 4.5 percentage points, making Léger the only pollster to have an average error of less than one point per party.
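The article doesn’t spell out the formula behind these error figures, but they are consistent with summing the absolute gap between each party’s poll number and its result. The sketch below assumes exactly that. The poll and result numbers are invented placeholders chosen to land on a 4.5-point total, not Léger’s actual figures, and `total_error` and `average_error_per_party` are hypothetical helper names.

```python
# Assumption: "total error" = sum of absolute differences (in percentage
# points) between a pollster's final numbers and the election result.
# All figures below are illustrative placeholders, not real 2021 data.

def total_error(poll: dict[str, float], result: dict[str, float]) -> float:
    """Sum of absolute poll-minus-result gaps across all parties."""
    return sum(abs(poll[party] - result[party]) for party in result)


def average_error_per_party(poll: dict[str, float], result: dict[str, float]) -> float:
    """Total error divided by the number of parties."""
    return total_error(poll, result) / len(result)


result = {"LPC": 33.0, "CPC": 34.0, "NDP": 18.0, "BQ": 8.0, "GPC": 2.0, "PPC": 5.0}
poll = {"LPC": 32.0, "CPC": 33.0, "NDP": 19.0, "BQ": 7.5, "GPC": 2.5, "PPC": 5.5}

print(total_error(poll, result))              # 4.5
print(average_error_per_party(poll, result))  # 0.75
```

Dividing by the number of parties is what turns a 4.5-point total into an average of under one point per party.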

Research Co. and Nanos Research get the silver and bronze while Abacus Data, the Angus Reid Institute and Ipsos had very good showings as well. These were the pollsters who were closer to the mark than the CBC Poll Tracker aggregate.

Some other pollsters struggled a bit more, particularly those who did not put out a poll in the final week (Innovative Research Group and YouGov) and the three IVR pollsters. The source of their error was primarily the significant over-estimation of the PPC and the under-estimation of the Conservatives.

Just as in 2019, both the Conservatives and Liberals were under-estimated in the polls, this time by about 1.2 points for the Liberals and 2.3 points for the Conservatives. No pollster over-estimated Conservative support or even put them at 34%. No pollster over-estimated the Liberals, either, but a few had them at 33%.
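Unlike the per-pollster totals, these under-estimates keep their sign: for one party, each pollster’s number minus the result, averaged across pollsters, with a negative value meaning the party beat its polls. A minimal sketch with hypothetical pollster figures (placeholders, not the published 2021 polls):

```python
from statistics import mean

# Hypothetical final-poll numbers for one party from four pollsters,
# plus that party's actual vote share (percentage points).
polls = [31.0, 32.0, 31.5, 30.5]
actual = 32.5

# Signed error per pollster: negative means the party was under-estimated.
signed_errors = [p - actual for p in polls]
bias = mean(signed_errors)

print(bias)                               # -1.25
print(all(e < 0 for e in signed_errors))  # True: no pollster over-estimated
```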

Nearly across the board, just as in 2019, the New Democrats were over-estimated, though in this case by a more modest 1.1 points (it was 2.7 points in 2019). The online pollsters tended to be further from the mark for the NDP than the IVR or telephone pollsters.

The Greens were over-estimated by 1.1 points, perhaps due entirely to their lack of a full slate. If you cut their polling numbers by 25% to reflect the share of ridings where they had no candidate, the over-estimation drops to just 0.3 points.
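The adjustment is simple arithmetic: scale the poll share down by the vacancy rate, then compare it with the result. In the sketch below, the 3.2% poll share and 2.1% result are hypothetical numbers chosen only to reproduce the 1.1- and 0.3-point gaps in the text; they are not the actual Green polling average or vote share, and `vacancy_adjusted_error` is a hypothetical helper.

```python
def vacancy_adjusted_error(poll_share: float, actual_share: float, vacancy_rate: float) -> float:
    """Poll-minus-result gap after scaling the poll share down by the
    fraction of ridings where the party ran no candidate (an assumed
    form of the adjustment described above)."""
    adjusted_poll = poll_share * (1 - vacancy_rate)
    return adjusted_poll - actual_share


# Hypothetical figures, picked to match the gaps quoted in the text.
poll_share, actual_share, vacancy_rate = 3.2, 2.1, 0.25

raw_error = poll_share - actual_share
adjusted_error = vacancy_adjusted_error(poll_share, actual_share, vacancy_rate)

print(round(raw_error, 1))       # 1.1
print(round(adjusted_error, 1))  # 0.3
```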

Finally, the People’s Party was a big problem for a lot of pollsters. They were over-estimated by 1.9 points, nearly as much as the Conservatives were under-estimated, but for a much smaller party that represents a proportionally bigger error. The IVR pollsters had the PPC at about 10%, or double their actual support, while the online pollsters had them around 6%, only a point above their actual result. In the end, the debate over who was accurately capturing PPC support has been settled, and it wasn’t the IVR pollsters. This casts doubt on some of the narratives about the PPC’s surging support, which at certain points of the campaign rested on polls putting the party in double digits in a number of regions.

Ipsos, Léger top the regional breakdowns

Canada’s elections are decided at the regional or provincial levels — and some pollsters did better than others beyond the national toplines.

Some regions were easier to call, though that does seem to be influenced somewhat by sample size. Ontario, Quebec and British Columbia, which routinely have the largest sample sizes, averaged the lowest error at about 1.9 points per party per pollster.

That error ballooned to over three points per party in Alberta, Atlantic Canada and the Prairies, where samples are smaller.

Let’s start with where the polls were most accurate, that supposedly volatile province where only one seat ended up changing hands: Quebec.

The only Quebec-based pollster nailed it in Quebec, as Léger totalled just four points of error and got every party to within one percentage point.

The Liberals and Bloc were both generally under-estimated, while the Conservatives also beat their polls a bit in Quebec. The NDP under-performed, as did the PPC and Greens.

But the polls here were very good, with all but three pollsters averaging an error of less than two points per party, the best result in the country.

Léger was again the top performer in Ontario, with a minuscule total error of 2.3 points, or 0.5 points per party. A one-point under-estimation of the Liberals and a one-point over-estimation of the PPC were the only blemishes.

Abacus and the ARI also performed well here, while Ipsos was the only other pollster to have an average error of less than two points per party. The biggest miss in Ontario was the average under-estimation of the Conservatives by about three points.

Here again in B.C., Léger was first among pollsters. Getting closest to the mark in the three biggest provinces is largely why Léger had the best numbers nationally, and here the error was just 0.6 points per party. Ipsos and B.C.-based Research Co. were also very close, and Mainstreet came in under an average error of two points per party as well.

Though the polls were off a little for all the parties, the biggest error here was the under-estimation of the Liberals by about two points. This might have been why the party’s seat performance in the province came as a bit of a surprise. Interestingly, support for the PPC was not greatly over-estimated here.

Now we move into more challenging territory. Ipsos was closest in Alberta, though even it had an error of 1.9 points per party. Abacus and Counsel Public Affairs were not much further behind.

The IVR pollsters in particular really struggled here, estimating PPC support at double (or, in the case of EKOS, nearly triple) what it actually turned out to be. As a result, the Conservatives were under-estimated by an average of about six points. That is much better than the 10-point miss in 2019, but still sizable.

In Atlantic Canada, Ipsos was the only pollster to have an error of less than two points per party, followed closely by Léger, Mainstreet and Research Co.

The pollsters struggled with the three biggest parties, under-estimating the Liberals by about three points and the Conservatives by about four points, while over-estimating the NDP by about four points. Hopes that the New Democrats could hold St. John’s East and maybe win Halifax were probably due to this over-estimation. In the end, the two main parties in the region got their vote out (or collected their lapsed vote from the NDP and PPC).

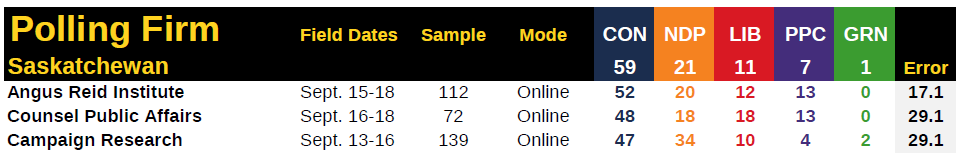

Finally, in the Prairies I’ve separated the pollsters into those that combined Saskatchewan and Manitoba and those that didn’t.

The polls were actually quite on the mark for both the NDP and Liberals, but under-estimated the Conservatives by about seven points — a bigger miss than in Alberta — and over-estimated the PPC by about four.

It was largely the same thing for the three pollsters that didn’t have combined Prairie results — under-shooting the Conservatives in Saskatchewan by a lot and in Manitoba by a little. Overall, it looks like Manitoba was easier to poll than Saskatchewan.

So, who can claim to have the closest regional results? Below, I’ve ranked the pollsters by their average finish across the regions, excluding the pollsters that didn’t publicly release regional results (Nanos) and those that weren’t in the field in the last week (YouGov, IRG).

Ipsos takes the cake, averaging a rank of 2.2 across the six regions. It was first in Alberta, Atlantic Canada and the Prairies, second in B.C. and third in Quebec.

Runner-up goes to Léger, who placed first in Ontario, Quebec and B.C. (though extra points should probably be awarded for calling the battlegrounds correctly) and was second in Atlantic Canada.
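The ranking is an average of each pollster’s finishing position in every region, where lower is better. A sketch with two hypothetical pollsters and invented ranks (not the actual regional standings):

```python
from statistics import mean

# Hypothetical finishing positions (1 = closest to the result) in six regions.
ranks = {
    "Pollster A": {"BC": 2, "AB": 1, "Prairies": 1, "ON": 4, "QC": 3, "ATL": 1},
    "Pollster B": {"BC": 1, "AB": 4, "Prairies": 5, "ON": 1, "QC": 1, "ATL": 2},
}

# Average rank per pollster; sort ascending so the best comes first.
average_rank = {name: mean(r.values()) for name, r in ranks.items()}
for name, avg in sorted(average_rank.items(), key=lambda item: item[1]):
    print(f"{name}: {avg:.1f}")
# Pollster A: 2.0
# Pollster B: 2.3
```

One design consequence: a pollster with several first-place finishes can still trail if it had a couple of weak regions, because every region counts equally.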

Overall, this was a good election for most pollsters and a good election for the industry as a whole. I think the polls accurately portrayed the rhythm of this race, with the daily trackers giving us a blow-by-blow of the trends (and blips, though too much attention might have been paid to the blips) and the online pollsters giving us the smoothed-out line that anchored it all.

How the Poll Tracker did

After holding the pollsters accountable, it wouldn’t be fair to not do the same for myself.

The CBC Poll Tracker’s aggregate is, of course, only as good as the polls, and so it performed well enough, ranking in the middle of the pack nationally and in most regions (as an aggregate normally would). I would have preferred not to have made the same errors as last time (a marginal Liberal vote advantage and an over-estimation of the NDP), but I feel it gave people an accurate portrait of the race.

But what about the seat projections?

Note: This was written before a recount awarded Châteauguay–Lacolle to the Liberals instead of the Bloc on Oct. 6. The actual final count was 160 Liberals and 32 Bloc.

The Poll Tracker was quite close to the mark for the number of seats for every party but the NDP, though the NDP’s 25 seats fell within the 68% confidence interval of the Poll Tracker. I’m also quite pleased with under-shooting the Liberals by only four seats, considering I was 20 seats off for the party back in 2019.

These numbers show the value of seat projections, since the model (and other models) consistently showed that a close national race in the popular vote between the Liberals and Conservatives would not translate into a close seat race. The Liberals never stopped being the favourites to win the most seats, and the final projections gave the Liberals a 57% chance of winning a minority government. The next most likely outcome was a 25% shot at a Conservative minority, predicated primarily on the Conservatives beating their polls by more than they actually did.

The Poll Tracker model made projections at the individual riding level, even if they weren’t made public. The model made the right call in 314 of 338 ridings, for an accuracy rating of 93%, better than 2019’s 87%. Including those considered toss-ups (the ones represented in the ranges above), the potential winner was identified in 329 of 338 ridings, for an accuracy rating of 97%. Again, that was better than 2019’s 94%, so I’m pleased with that.
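The accuracy ratings are straight proportions of the 338 ridings. Using only the counts given above, this reproduces both percentages and the number of outright misses:

```python
ridings = 338
correct_calls = 314            # projected winner matched the actual winner
identified_incl_tossups = 329  # winner was the call or a party in a toss-up

accuracy = correct_calls / ridings
accuracy_incl_tossups = identified_incl_tossups / ridings
missed = ridings - identified_incl_tossups

print(f"{accuracy:.0%}")               # 93%
print(f"{accuracy_incl_tossups:.0%}")  # 97%
print(missed)                          # 9
```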

Because I think they are revealing of local dynamics, here are the nine calls that the model missed:

Richmond Centre (Safe Conservative won by the Liberals)

Steveston–Richmond East (Likely Conservative won by the Liberals)

West Vancouver–Sunshine Coast–Sea to Sky Country (Likely Conservative won by the Liberals)

Hamilton Mountain (Likely NDP won by the Liberals)

Kitchener Centre (Likely Conservative won by the Greens)

Markham–Unionville (Safe Conservative won by the Liberals)

Coast of Bays–Central–Notre Dame (Safe Liberal won by the Conservatives)

Miramichi–Grand Lake (Safe Liberal won by the Conservatives)

Cape Breton–Canso (Safe Conservative won by the Liberals)

Coast of Bays–Central–Notre Dame surprised everyone. Elsewhere, I don’t think it is a coincidence that Richmond Centre, Steveston–Richmond East and Markham–Unionville are the three ridings in Canada with the largest Chinese populations. Kitchener Centre was a crapshoot because of the suspension of the Liberal campaign. The absence of NDP incumbent Scott Duvall from the ballot in Hamilton Mountain might have been a factor there, while the two Maritime misses were likely due to some of the Atlantic sub-samples I was using to make calls in the region. Any explanations for the West Vancouver miss are welcome, though it was rated as a “Likely” rather than “Safe” Conservative win.

But all in all, it was a good showing from the Poll Tracker and a big improvement over the last election. Now, will this be the last of the Poll Trackers? I haven’t decided yet.